Published on

- 5 min read

Your cache is not protected from cache stampede

This article is presented as a part of C# Advent 2025.

Do you have a database query or a paid API call, and you cache the result? Are you using ConcurrentDictionary or MemoryCache for caching?

Caches built on these classes have one problem: lack of protection against cache stampede. Under certain load, the cache will execute the same request multiple times due to lack of coordination between threads and replicas. In this article, I’ll show you how cache stampede affects C# applications and what you can do about it.

What is cache stampede

Imagine we’re caching the result of a long database query. Clients access this result dozens of times per second. While the value is in the cache, everything is fine: each request simply reads it from memory.

The problem starts when the cache expires. If the cache doesn’t know how to coordinate parallel requests to the same key, dozens of threads simultaneously go to the database for the same value. A similar situation can happen after application restart, when the cache is not yet warmed up and is empty.

As a result, instead of one “heavy” operation, you get dozens. This is called cache stampede.

Benchmarks

I compared the behavior of ConcurrentDictionary, MemoryCache, and the relatively new HybridCache in two scenarios:

- IO-bound – simulating a database query or external API call.

async Task<int> IOBoundOperation(CancellationToken ct)

{

// Simulate IO operation

await Task.Delay(200, ct);

// Simulate 1 KB memory allocation

var result = new byte[1024].Length;

return result;

}

- CPU-bound – simulating “heavy” computations.

Task<int> CPUBoundOperation(CancellationToken _)

{

// Simulate CPU operation

var result = 0;

for (int i = 0; i < 200_000_000; i++)

{

result += i % 7;

}

return Task.FromResult(result);

}

The number of parallel requests varies from 1 to Environment.ProcessorCount * 2 (number of logical processors).

Measured parameters:

- Median execution time — median benchmark execution time.

- Allocated memory — how much memory was allocated.

- Operations executed — how many times the IO-bound or CPU-bound operation was actually called (ExecuteOperation method).

Full benchmark code and results are in the repository.

Testing ConcurrentDictionary

A cache based on ConcurrentDictionary usually looks like this:

-

Try to read the value by key using TryGetValue. If the value exists, return it.

-

If not, execute the “heavy” operation, save the result through TryAdd and return it.

if (!_dictionary.TryGetValue(CacheKey, out var existing))

{

var value = await ExecuteOperation(Operation, CancellationToken.None);

_dictionary.TryAdd(CacheKey, value);

return value;

}

return existing;

It’s important to understand that TryGetValue and TryAdd methods are thread-safe, but they only protect us from races within individual operations. There’s no thread synchronization between TryGetValue and TryAdd.

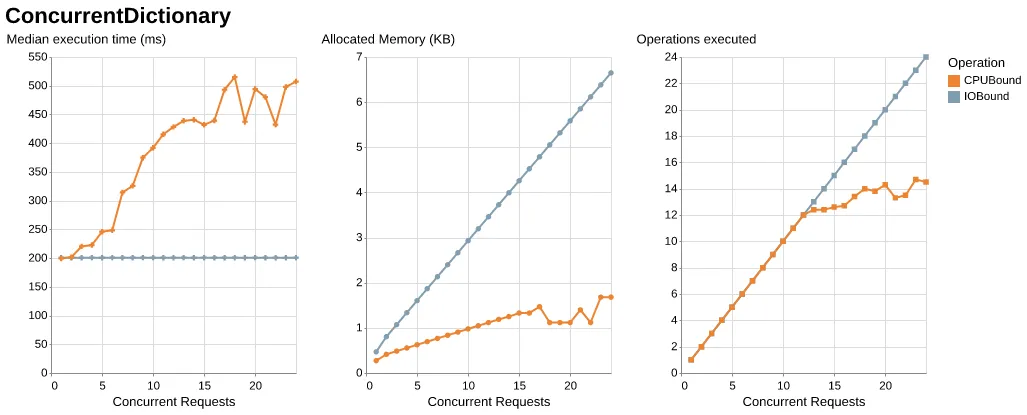

As a result, we see:

-

For CPU-bound operations, as the number of parallel requests grows, the median time increases by about 2-3 times. The Operations executed value grows up to 15.

-

For IO-bound operations, the number of executions grows linearly: as many parallel requests, as many times ExecuteOperation is executed.

ConcurrentDictionary also has a GetOrAdd method that seems atomic. The benchmark code could be rewritten like this:

private readonly ConcurrentDictionary<string, Task<int>> _dictionary = new();

return await _dictionary.GetOrAdd(CacheKey, _ =>

{

return ExecuteOperation(Operation, CancellationToken.None);

});

But if you look at the GetOrAdd implementation, it’s no different from the example with TryGetValue and TryAdd above. That means it also doesn’t guarantee a single call to the passed delegate. This non-obvious behavior is discussed in this GitHub issue.

Testing MemoryCache

MemoryCache also has a convenient GetOrCreateAsync method:

return await _memoryCache.GetOrCreateAsync(CacheKey, async entry =>

{

return await ExecuteOperation(Operation, CancellationToken.None);

});

And, like in ConcurrentDictionary, at first glance it might seem that when using it, the ExecuteOperation method will be called only once. But if you look at the GetOrCreateAsync source code, you can see that there’s no cache stampede protection there. This is confirmed by the benchmark results.

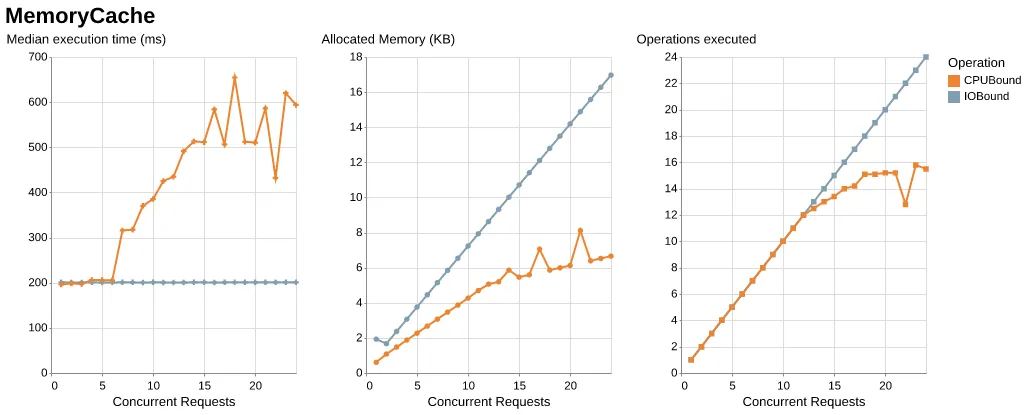

The graphs show almost the same thing as ConcurrentDictionary:

-

For CPU-bound operations, as the number of parallel requests grows, the median time increases by 3-4 times.

-

For IO-bound operations, the number of executions also grows linearly: as many parallel requests, as many times the ExecuteOperation method is executed.

Testing HybridCache

The next candidate is HybridCache. This library appeared with the release of .NET 9 and combines L1 (local, IMemoryCache) and L2 (persistent, IDistributedCache). At the API level, everything looks the same as in IMemoryCache:

return await hybridCache.GetOrCreateAsync(CacheKey, async (cancellationToken) =>

{

return await ExecuteOperation(Operation, cancellationToken);

});

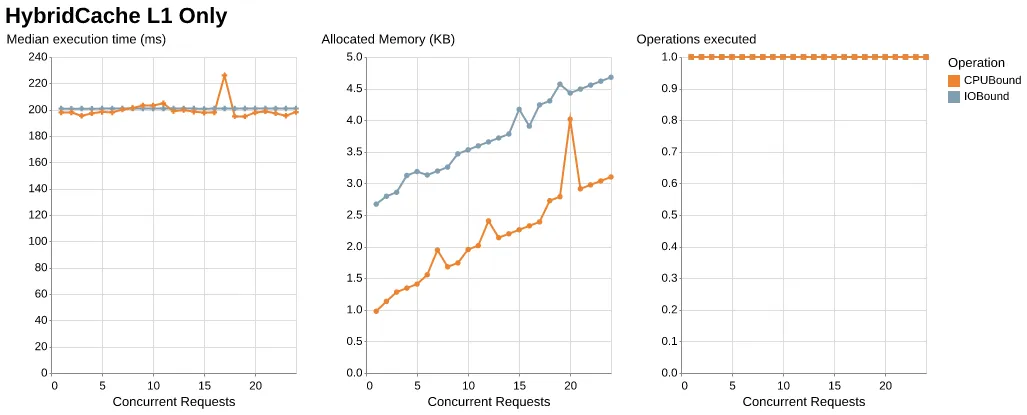

The key difference is that HybridCache has built-in cache stampede protection, but only within a single process. If you’re interested in how exactly it’s implemented, check out the GetOrCreateAsync and GetOrCreateStampedeState methods.

When using only L1, we finally get cache stampede protection, which is confirmed by the benchmark results – the number of ExecuteOperation calls is always 1.

But, as I already mentioned, this protection is only at the process level. If the service has multiple replicas, each replica will execute the ExecuteOperation method.

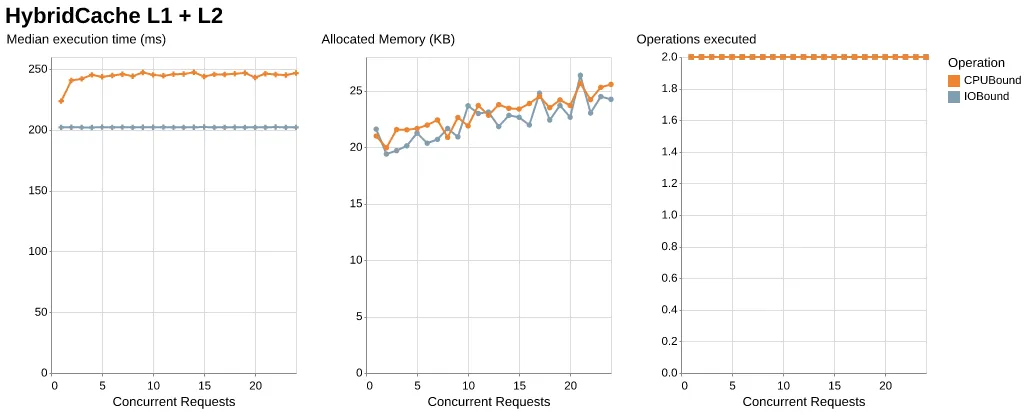

Having an L2 level won’t help with cache stampede: HybridCache doesn’t have a distributed lock mechanism. Although .NET developers agree that it would be nice to add such functionality sometime in the future.

Conclusion

-

Don’t use ConcurrentDictionary and MemoryCache without cache stampede protection. In high-load applications, this will definitely lead to excessive execution of “heavy” operations.

-

If you have a single replica, just use HybridCache – its functionality will be enough.

-

If you have multiple replicas and it’s acceptable to execute the “heavy” operation several times, HybridCache is still suitable.

-

If you have multiple replicas and executing extra “heavy” operations is unacceptable, you can

-

Apply Cache TTL Jittering – adding random time to the cache key’s TTL value so that TTL is not the same. This doesn’t guarantee protection from cache stampede, but significantly reduces the probability of synchronous updates on all replicas. In some libraries, such as FusionCache, this functionality is available out of the box.

-

Apply the Single Flight pattern at the L2 level. In this case, only one replica will execute the “heavy” operation. The others will wait and read the already updated value from L2. Unfortunately, I haven’t found ready-made libraries for distributed caches that implement this functionality. If you know of any, please write in the comments.